An Overview of Cognitive Computing

Here’s a working definition of the term: Cognitive computing describes computing systems that are designed to emulate human or near-human intelligence, so they can perform tasks that would otherwise fall to humans as well as, or even better than, a human would.

IBM, whose Watson computer system with its natural language processing (NLP) and machine learning capabilities is perhaps the best known “face” of cognitive computing, coined the term cognitive computing to describe systems that could learn, reason, and interact in human-like fashion. Cognitive computing spans both hardware and software.

As such, cognitive computing brings together two quite different fields: cognitive science and computer science. Cognitive science studies the workings of the brain, while computer science involves the theory and design of computer systems. Together, they attempt to create computer systems that mimic the cognitive functions of the human brain.

Why would we want computer systems that can work like human brains? We live in a world that generates profuse amounts of information. Most estimates find that the digital universe is doubling in size at least every two years, with data expanding more than 50 times from 2010 to 2020. Organizing, manipulating, and making sense of that vast amount of data exceeds human capacity. Cognitive computing systems are designed to help us do it.

You’ve probably crossed paths with cognitive computing applications already, perhaps without even knowing it. Currently, cognitive computing is helping doctors diagnose disease, meteorologists predict storms, and retailers gain insight into how customers behave.

Some of the greatest demand for cognitive computing solutions comes from big data analytics, where the quantities of data surpass human ability to parse but offer profit-generating insights that cannot be ignored. Lower-cost, cloud-based cognitive computing technologies are becoming more accessible, and aside from the established tech giants — such as IBM, Google, and Microsoft — a number of smaller players have been making moves to grab a piece of the still-young cognitive computing market.

Little wonder, then, that the global market for cognitive computing is expected to grow at an astronomical 49.9 percent compound annual growth rate from 2017 to 2025, burgeoning from just under $30 billion to over $1 trillion, according to CMFE News.

What Is the Definition of Cognitive Intelligence?

To understand what cognitive computing is designed to do, we can benefit from first understanding the quality it seeks to emulate: cognitive intelligence.

Cognitive intelligence is the human ability to think in abstract terms to reason, plan, create solutions to problems, and learn. It is not the same as emotional intelligence, which is, according to psychologists Peter Salovey and John D. Mayer, “the ability to monitor one’s own and others’ feelings and emotions, to discriminate among them, and to use this information to guide one’s thinking and actions.” Put simply, cognitive intelligence is the application of mental abilities to solve problems and answer questions, while emotional intelligence is the ability to effectively navigate the social world.

Cognitive intelligence is consistent with psychologist Phillip L. Ackerman’s concept of intelligence as knowledge, which posits that knowledge and process are both part of the intellect. Cognitive computing seeks to design computer systems that can perform cognitive processes in the same way that the human brain performs them.

What Is Meant by Cognitive Computing?

IBM describes cognitive computing as “systems that learn at scale, reason with purpose, and interact with humans naturally.” Within the computer science of cognitive computing, a number of disciplines converge and intersect, but the two broadest and most significant are artificial intelligence (AI) and signal processing.

There’s no widely accepted definition of cognitive computing. In 2014, however, the Cognitive Computing Consortium offered a cross-disciplinary definition by bringing together representatives from 18 major players in the cognitive computing universe, including Google, IBM, Oracle, and Microsoft/Bing. They sought to define the field by its capabilities and functions: as an advancement that would enable computer systems to handle complex human problems, which are dynamic, information-rich, sometimes conflicting, and require understanding of context. In practice, it will make a new class of problems computable.

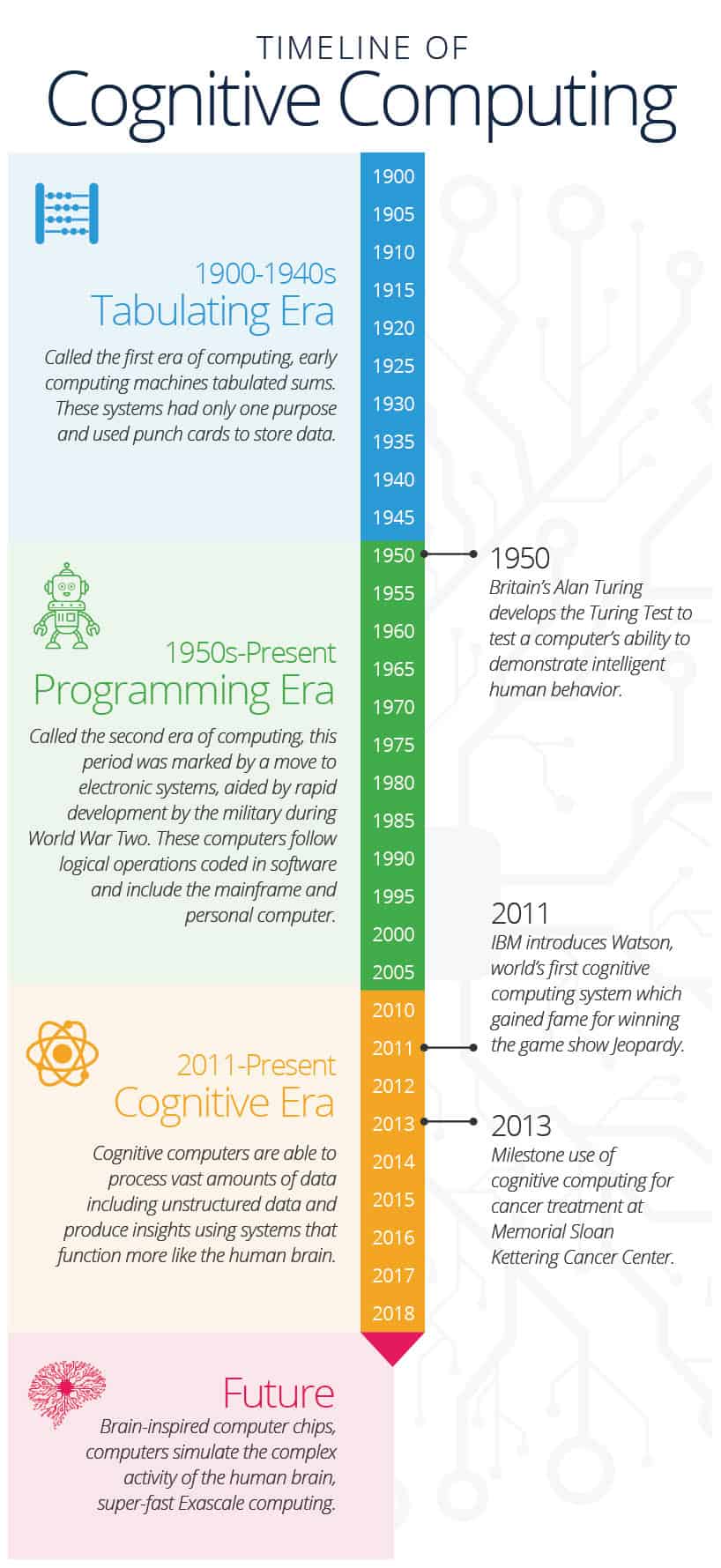

As a result, cognitive computing promises a paradigm shift to computing systems that can mimic the sensory, processing, and responsive capacities of the human brain. It’s often described as computing’s “third era.” This follows the first era of tabulating systems, in which computers could count (circa 1900), and the second era of programmable systems, which allowed for general-purpose computers that could be programmed to perform specific functions (circa 1950).

What Is Cognitive Technology?

Cognitive technology, another term for cognitive computing or cognitive systems, enables us to create systems that will acquire information, process it, and act upon it, learning as they are exposed to more data. Data and reasoning can be linked with adaptive output for particular users or purposes. These systems can prescribe, suggest, teach, and entertain.

IBM Research’s Watson for Oncology is a cognitive computing system that uses machine learning to help doctors select the best treatment options for cancer patients, based on evidence. The cognitive system helps the oncologist harness big data from past treatments.

In simpler applications, software based on cognitive-computing techniques is already in wide use, for example in chatbots that field customer queries or deliver news.

Cognitive computing systems can redefine relationships between human beings and their digitally pervasive, data-rich environments. Natural language processing capabilities enable them to communicate with people using human language. One hallmark of a true cognitive computing system is its ability to generate thoughtful responses to input, rather than being limited to prescribed responses.

Among the other capabilities of cognitive computing systems are pattern recognition (classifying data inputs using a defined set of key features) and data mining (inferring knowledge from large sets of data). Both capabilities are based on machine learning techniques in which computing systems “learn” by being exposed to data in a process called training, wherein the systems figure out how to arrive at solutions to problems.
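Learning by training can be illustrated with one of the simplest pattern-recognition techniques, a nearest-centroid classifier. The Python below is a minimal sketch, not how any particular cognitive system works: the fruit measurements and the function names `train_centroids` and `classify` are hypothetical. Training averages the labeled examples for each class; classification assigns a new input to the class whose average it lies closest to.

```python
from math import dist

def train_centroids(examples):
    """'Training': average the feature vectors seen for each label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(centroids, features):
    """Assign the label whose centroid lies closest (Euclidean) to the input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical labeled data: (weight in grams, diameter in cm) -> fruit
training = [
    ((150.0, 7.0), "apple"),
    ((170.0, 7.5), "apple"),
    ((10.0, 2.0), "grape"),
    ((12.0, 2.2), "grape"),
]
centroids = train_centroids(training)
print(classify(centroids, (160.0, 7.2)))  # apple
print(classify(centroids, (11.0, 2.1)))   # grape
```

The "defined set of key features" here is just weight and diameter; richer systems learn from many more features, but the train-then-classify pattern is the same.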

These capabilities drive one of cognitive computing’s most powerful promises: the ability to make sense of unstructured data. Structured data is information in the form that computers handle so well; think of it as pieces of data arranged neatly into a spreadsheet, where the computer knows exactly what each cell contains. Unstructured data, on the other hand, is data that doesn’t fit neatly into boxes. IBM breaks unstructured data down into four forms: language, speech, vision, and data insights. Unstructured data typically requires context in order to be understood.

Computing systems typically struggle to handle unstructured data. With the increasing amount of analyzable data in unstructured form, the inability to extract insights from it represents a big drawback. According to the International Data Corporation, only one percent of the world’s data is ever analyzed, and with estimates that unstructured data makes up around 80 percent of it, that’s a lot of unproductive data.

The Components of a Cognitive Computing System

A full-blown cognitive computing system includes the following components:

- Artificial Intelligence (AI): AI is the demonstration of intelligence by machines: a machine senses or otherwise perceives its environment and then takes steps to achieve the goals it was designed to reach. Early AI was more rudimentary, often amounting to little more than data search, and cognitive computing is generally considered an advanced form of AI.

- Algorithms: Algorithms are sets of rules that define the procedures that need to be followed to solve a particular type of problem.

- Machine Learning and Deep Learning: Machine learning is the use of algorithms to dig through and parse data, learn from it, and then apply what they have learned to perform tasks in the future. Deep learning is a subfield of machine learning that uses a layered structure of algorithms, loosely modeled on the networks of neurons in the human brain. Deep learning relies on much larger amounts of data and solves problems from end to end, instead of breaking them into parts, as in traditional machine learning approaches.

- Data Mining: Data mining is the process of inferring knowledge and data relationships from large, pre-existing datasets.

- Reasoning and Decision Automation: Reasoning is the term used to describe the process by which a cognitive computing system applies its learning to achieve objectives. It’s not the same as human reasoning, but is meant to mimic it. The outcome of a reasoning process may be decision automation, in which software autonomously generates and implements a solution to a problem.

- Natural Language Processing (NLP) and Speech Recognition: Natural language processing is the use of computing techniques to understand and generate responses to human languages in their natural — that is, conventionally spoken and written — forms. NLP spans two subfields: natural language understanding (NLU) and natural language generation (NLG). A closely related technique is speech recognition, which converts speech input into written language that is suitable for NLP.

- Human-Computer Interaction and Dialogue and Narrative Generation: To facilitate the expansion of human cognition through cognitive computing, the interface between human and computer needs to be smooth, and the communication needs to be seamless, even interesting. This aspect is termed human-computer interaction, and a big part of it is NLP. Beyond that, there are efforts to make cognitive computing systems more personable, since this will likely improve the quality and frequency of communication between humans and computers.

- Visual Recognition: Visual recognition uses deep learning algorithms to analyze images and identify objects, such as faces.

- Emotional Intelligence: For a long time, cognitive computing steered clear of trying to mimic emotional intelligence, but fascinating efforts, such as those of Affectiva, a startup spun out of the MIT Media Lab, are trying to build computing systems that can understand human emotion through such indicators as facial expression and then generate nuanced responses. The goal is to create cognitive computing systems that are strikingly human-like because of their ability to read emotional cues.
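Data mining, as listed above, covers many techniques. One classic starting point is counting how often items occur together, which is the first pass of association-rule mining algorithms such as Apriori. This minimal Python sketch assumes hypothetical shopping baskets and a hypothetical `frequent_pairs` helper; production tools typically add candidate pruning and scale to far larger datasets.

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(transactions, min_support):
    """Return item pairs whose support (the share of transactions that
    contain both items) is at least min_support."""
    pair_counts = Counter()
    for basket in transactions:
        # sorted(set(...)) removes duplicate items and fixes pair order
        for pair in combinations(sorted(set(basket)), 2):
            pair_counts[pair] += 1
    n = len(transactions)
    return {pair: count / n for pair, count in pair_counts.items()
            if count / n >= min_support}

# Hypothetical pre-existing purchase records
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "cereal"],
    ["bread", "butter", "jam"],
]
print(frequent_pairs(baskets, min_support=0.5))
# {('bread', 'butter'): 0.75}
```

The inferred relationship ("bread and butter are bought together in 75 percent of baskets") was never stated explicitly in the data; it emerges from counting.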

The Hallmarks of Cognitive Computing

Cognitive computing systems are adaptive, which means they keep learning as information and objectives change. They can be designed to assimilate new data in real time (or something close to it), which enables them to deal with unpredictability.

Cognitive systems are also interactive, not only with the human beings whom they work for (or with), but also with other technology architectures and with other people in their environment. Further, they are iterative, which means they use data to help solve ambiguous or incomplete problems in a repeating cycle of analysis. They are also stateful, which means they remember previous interactions and can develop knowledge incrementally instead of requiring all previous information to be explicitly stated in each new interaction request.
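The difference between a stateful and a stateless exchange can be sketched in a few lines of Python. This is a toy under stated assumptions: the `StatefulSession` class is hypothetical, and requests arrive as ready-made dictionaries rather than natural language, which a real system would first have to parse.

```python
class StatefulSession:
    """Toy conversational session that keeps facts from earlier turns,
    so a follow-up request does not have to restate them."""

    def __init__(self):
        self.memory = {}  # accumulated context, e.g. {"city": "Boston"}

    def handle(self, request):
        # Merge any new details from this request into remembered context
        self.memory.update(request)
        city = self.memory.get("city")
        topic = self.memory.get("topic")
        if city is None or topic is None:
            return "Which city and topic?"
        return f"Looking up {topic} for {city}"

session = StatefulSession()
print(session.handle({"city": "Boston", "topic": "weather"}))  # Looking up weather for Boston
print(session.handle({"topic": "traffic"}))  # Looking up traffic for Boston (city remembered)
```

A stateless handler would answer the second request with "Which city and topic?", because it would have no memory of the first.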

Lastly, cognitive systems are typically contextual in the way they handle problems, which means they draw data from changing conditions, such as location, time, user profile, regulations, and goals to inform their reasoning and interactions. They’re capable of accessing multiple sources of information and using sensory perception to understand such things as auditory or gestural inputs from interactions with human beings. And, of course, they’re capable of making sense of both structured and unstructured data, which improves their ability to understand context.

In summary, cognitive computing is the creation of computer systems that are capable of solving problems that previously called for human or near-human intelligence. In some applications, they’re being designed to do this autonomously, while in others, they’re being designed to assist human beings and expand human cognitive capabilities, to supplement rather than replace humans.

In order to assist humans or work autonomously, cognitive systems have to be self-learning, which they accomplish through the use of machine learning algorithms. These machine learning capabilities, along with reasoning and natural language processing, constitute the basis of artificial intelligence. AI allows these systems to simulate human thought processes and, when dealing with big data, to find patterns and draw reasoned conclusions.

What Is Cognition in AI?

Cognitive computing systems are designed to work like the human brain. They are able to process asynchronous information, adapt and respond to events, and carry out multiple cognitive processes simultaneously to solve a specific problem.

How are the concepts of cognitive computing and AI related, in theory and in practice? It depends on who you ask.

IBM says that while cognitive computing shares attributes with artificial intelligence, “the complex interplay of disparate components, each comprising their own mature disciplines” differentiates cognitive computing from AI. Simplified, that means that while artificial intelligence powers machines that can do human tasks, cognitive computing goes several steps further to create machines that can actually think like humans.

Another key difference lies in the way artificial intelligence and cognitive computing are designed to tackle real-world problems. While artificial intelligence is meant to replace human intelligence, cognitive computing is meant to supplement and facilitate it. To return to our earlier example: IBM Watson for Oncology, a cognitive computing system, sifts through masses of previous cancer treatment cases to help oncologists arrive at evidence-based treatment options. A hypothetical artificial intelligence system in the same role would not only analyze previous treatment cases, but also decide upon and implement a course of treatment for the patient.

But other experts see the distinction differently, and believe cognitive computing is really just a subfield of artificial intelligence. Under this theory, multiple AI systems mimic cognitive behaviors we associate with thinking — and even that may be going too far. Gartner VP Tom Austin, who leads an AI technology research committee, has been quoted as saying that the term cognitive is only “marketing malarkey,” based on the incorrect assumption that machines can think.

That last opinion certainly has some evidence behind it. Because AI has gained some negative associations (think of all the books and movies about robot uprisings), some in the artificial intelligence community believe that the term cognitive computing is a convenient euphemism for the latest wave of AI technologies, without all the baggage of the term AI.

Cognitive Analytics as Part of Cognitive Computing

Cognitive computing is being harnessed to drive data analytics in an especially powerful way.

Cognitive technologies are especially suited to processing and analyzing large sets of unstructured data. (Unstructured data, you’ll remember, is data that’s not easily assigned to tabular forms and that requires context to parse; typically, it takes the form of language, images, or sound.)

Since unstructured data is hard to organize, data analytics based on conventional computing requires data to be manually tagged with metadata (data that provides information about other data) before a computer can conduct any useful analysis or insight generation. That’s a huge headache if you’re dealing with even moderately large data sets.

Cognitive analytics solves this problem because it does not require unstructured data to be tagged with metadata. Pattern recognition capabilities transform unstructured data into a structured form, thereby unlocking it for analysis.

Machine learning enables these analytics to adapt to different contexts, and NLP can make data insights understandable for human users.
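As a rough illustration of how a learned model can turn untagged free text into structured records, the Python below trains a tiny multinomial naive Bayes classifier and uses it to attach a topic to each comment. Naive Bayes is a simple stand-in chosen for brevity, not the technique any specific cognitive analytics product uses, and the training sentences and topic labels are hypothetical. The model still learns from a handful of labeled examples; what it removes is the need to tag each new document by hand.

```python
import math
from collections import Counter, defaultdict

def train_nb(labeled_texts):
    """Multinomial naive Bayes training: count words per label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in labeled_texts:
        words = text.lower().split()
        word_counts[label].update(words)
        label_counts[label] += 1
        vocab.update(words)
    return word_counts, label_counts, vocab

def predict(model, text):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Log prior plus log likelihoods with add-one (Laplace) smoothing
        score = math.log(label_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

training = [
    ("the package arrived late and damaged", "shipping"),
    ("delivery was slow again", "shipping"),
    ("i was charged twice on my card", "billing"),
    ("refund the extra charge please", "billing"),
]
model = train_nb(training)

# Untagged free text in, structured records out
comments = ["my card was charged twice", "the delivery arrived damaged"]
records = [{"text": c, "topic": predict(model, c)} for c in comments]
print(records)
```

Once each comment carries a `topic` field, it can be counted, filtered, and charted like any spreadsheet column.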

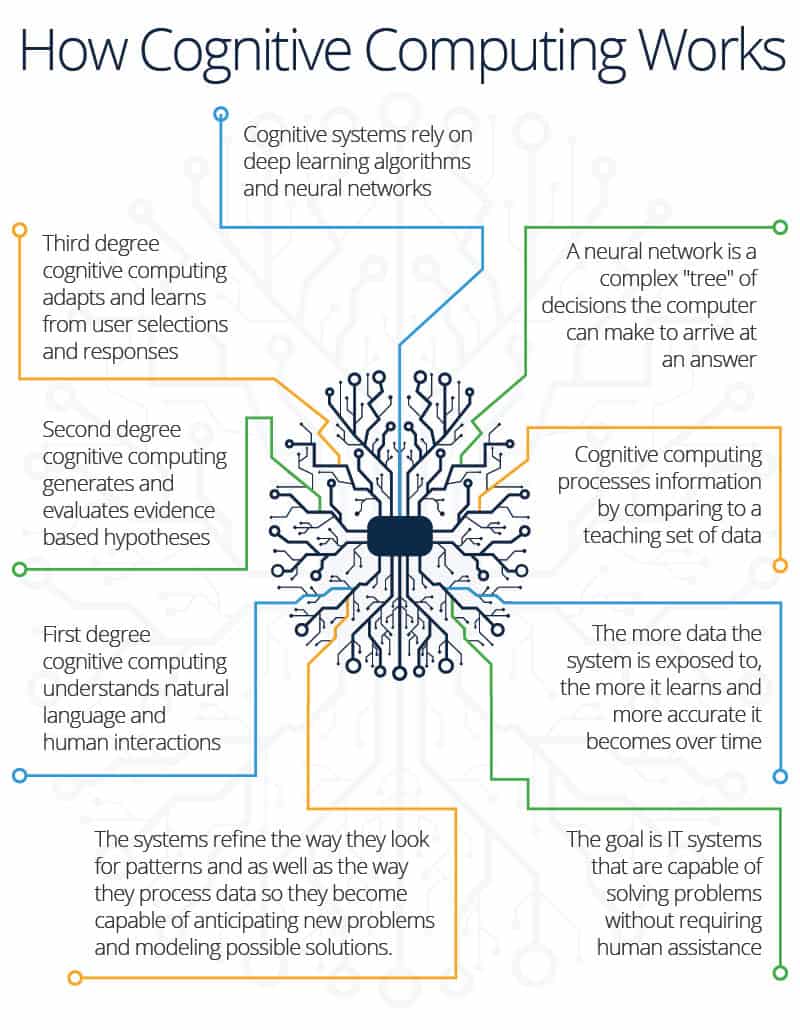

How Cognitive Computing Works

So, how does cognitive computing work in the real world? Cognitive computing systems may rely on deep learning and neural networks. Deep learning, which we touched on earlier in this article, is a specific type of machine learning that is based on an architecture called a deep neural network.

The neural network, inspired by the architecture of neurons in the human brain, comprises systems of nodes — sometimes termed neurons — with weighted interconnections. A deep neural network includes multiple layers of neurons. Learning occurs as a process of updating the weights between these interconnections. One way of thinking about a neural network is to imagine it as a complex decision tree that the computer follows to arrive at an answer.
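The weighted-interconnection idea can be made concrete with a forward pass through a tiny network. In this minimal Python sketch, each neuron computes a weighted sum of its inputs plus a bias and applies a sigmoid activation; the layer sizes and weights are made up for illustration, and real frameworks perform the same arithmetic with vectorized matrix operations.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs plus a bias,
    then applies the sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
            for neuron_weights, b in zip(weights, biases)]

def network_forward(inputs, layers):
    """Pass the signal through each layer in turn; a 'deep' network is
    simply one with several such layers."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1]),
    ([[1.2, -0.4, 0.6]], [0.05]),
]
print(network_forward([1.0, 0.5], layers))
```

Learning, in this picture, means changing the numbers inside `layers` so that the output moves closer to the desired answer.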

In deep learning, the learning takes place through a process called training. Training data is passed through the neural network, and the output generated by the network is compared to the correct output prepared by a human being. If the outputs match (rare at the beginning), the machine has done its job. If not, the neural network readjusts the weighting of its neural interconnections and tries again. As the neural network processes more and more training data — in the region of thousands of cases, if not more — it learns to generate output that more closely matches the human-generated output. The machine has now “learned” to perform a human task.
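The compare-and-readjust loop can be sketched with a single artificial neuron learning the logical AND function by gradient descent. One neuron is far from a deep network, and the names, learning rate, and epoch count here are illustrative assumptions, but the principle is the one described above: nudge each weight in the direction that shrinks the gap between the output and the labeled answer.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_neuron(data, epochs=5000, learning_rate=0.5):
    """Train one sigmoid neuron: compare its output with the correct
    (human-labeled) answer and adjust the weights to reduce the error."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            output = sigmoid(w1 * x1 + w2 * x2 + b)
            # For a sigmoid with cross-entropy loss, this difference is the
            # gradient of the loss with respect to the weighted sum
            error = output - target
            w1 -= learning_rate * error * x1
            w2 -= learning_rate * error * x2
            b -= learning_rate * error
    return w1, w2, b

# Labeled training data for logical AND: the "correct output"
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, b = train_neuron(and_data)
for (x1, x2), target in and_data:
    prediction = round(sigmoid(w1 * x1 + w2 * x2 + b))
    print((x1, x2), "->", prediction, "expected", target)
```

At the start every output is 0.5 and matches nothing; after training, each rounded output agrees with its label.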

Preparing training data is an arduous process, but the big advantage of a trained neural network is that once it has learned to generate reliable outputs, it can tackle future cases at a greater speed than humans ever could, and it can keep improving as it is trained on new data. The training is an investment that pays off over time, and machine learning researchers have come up with interesting ways to simplify the preparation of training data, such as by crowdsourcing it through services like Amazon Mechanical Turk.

IBM describes Watson as combining three “transformational technologies.” The first is the ability to understand natural language and human communication; the second is the ability to generate and evaluate evidence-based hypotheses; and the third is the ability to adapt and learn from its human users.

Although this work aims at computers that can solve human problems, the goal is not to push human beings’ cognitive abilities out of the picture by having computing systems replace people outright, but instead to supplement and extend them.

A Brief History of Cognitive Computing

Cognitive computing is the latest step in a long history of AI development. Early AI began as a kind of intelligent search, combing through decision trees in applications such as simple games, and as learning through reward-based systems. The downside was that decision trees grow explosively: even tic-tac-toe allows 255,168 distinct games, and for richer games such as chess, exhaustive search quickly becomes unworkable.
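To get a feel for how quickly game trees grow, the following Python sketch exhaustively walks the tic-tac-toe tree and counts every distinct game, ending each line of play at a win or a full board. The function names are illustrative. Tic-tac-toe can still be enumerated on an ordinary computer; the trees of richer games such as chess are astronomically larger.

```python
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
             (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
             (0, 4, 8), (2, 4, 6)]             # diagonals

def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] is not None and board[a] == board[b] == board[c]:
            return board[a]
    return None

def count_games(board, player):
    """Depth-first walk of the game tree, counting every distinct
    sequence of moves that ends in a win or a full board."""
    if winner(board) is not None or all(cell is not None for cell in board):
        return 1
    total = 0
    for i in range(9):
        if board[i] is None:
            board[i] = player
            total += count_games(board, "O" if player == "X" else "X")
            board[i] = None  # undo the move before trying the next square
    return total

total_games = count_games([None] * 9, "X")
print(total_games)  # 255168
```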

After AI-as-search came supervised learning algorithms, such as perceptrons, and unsupervised learning algorithms, such as clustering. These were followed by decision trees, which are predictive models that track a series of observations to their logical conclusions. Decision trees were succeeded by rules-based systems that combined knowledge bases with rules to perform reasoning tasks and reach conclusions.
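A rules-based system of the kind just described can be sketched as forward chaining: repeatedly fire any rule whose conditions are all known facts, until nothing new can be derived. The knowledge base below is a hypothetical toy, and many real expert systems add conflict resolution, certainty factors, and explanation facilities that this sketch omits.

```python
def forward_chain(facts, rules):
    """Fire every rule whose conditions are all known, adding its
    conclusion, until a full pass derives no new facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical knowledge base of if-then rules: (conditions, conclusion)
rules = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
    ({"is_penguin"}, "eats_fish"),
]
facts = {"has_feathers", "cannot_fly", "swims"}
derived = forward_chain(facts, rules)
print(sorted(derived - facts))  # ['eats_fish', 'is_bird', 'is_penguin']
```

Unlike a trained model, every conclusion here can be traced back to an explicit rule, which is part of why rules-based systems were attractive for reasoning tasks.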

The machine learning era of AI brought increasingly complex neural networks, enabled by algorithms such as backpropagation, which allowed for error correction in multi-layered neural networks. The convolutional neural network (CNN) was one multi-layered neural network architecture used in image processing and video recognition; the long short-term memory (LSTM) network, a recurrent architecture whose feedback connections let it carry information from one step to the next, was used for applications dealing with time-series data.
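Backpropagation is easiest to see on a toy network with one hidden neuron and one output. The Python sketch below (hypothetical parameters, a squared-error loss, a sigmoid hidden layer) computes the gradients by passing the output error backward with the chain rule, checks them against finite differences, and takes one gradient-descent step. Real multi-layer networks apply the same chain rule across many neurons per layer, usually with vectorized matrix code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)  # hidden-layer activation
    y = w2 * h + b2           # linear output layer
    return h, y

def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2

def backprop(params, x, target):
    """Send the output error backward through both layers with the chain
    rule, returning dLoss/dParam for [w1, b1, w2, b2]."""
    w2 = params[2]
    h, y = forward(params, x)
    d_y = y - target          # dL/dy for the squared-error loss
    d_w2, d_b2 = d_y * h, d_y
    d_h = d_y * w2            # error passed back to the hidden layer
    d_z1 = d_h * h * (1 - h)  # multiply by the sigmoid's derivative
    return [d_z1 * x, d_z1, d_w2, d_b2]

def numerical_gradient(params, x, target, eps=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads

params = [0.4, -0.1, 1.5, 0.2]  # hypothetical starting w1, b1, w2, b2
analytic = backprop(params, x=2.0, target=1.0)
numeric = numerical_gradient(params, x=2.0, target=1.0)
print(all(abs(a - n) < 1e-6 for a, n in zip(analytic, numeric)))  # True

# One gradient-descent step using the backpropagated error lowers the loss
step = [p - 0.1 * g for p, g in zip(params, analytic)]
print(loss(step, 2.0, 1.0) < loss(params, 2.0, 1.0))  # True
```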

CNN and LSTM are really applications of deep learning. While deep learning has been applied to complex problems, it’s also afflicted by what’s known as the “black box problem”: for any output generated by a deep learning program, it is very difficult to know what logical factors the system took into account to arrive at that output.

What You Need to Know about Cognitive Computing — and Why

Whether you are a leader, technology professional, or manager, you may be wondering what cognitive computing can offer your organization.

The benefits of cognitive computing generally fall within three areas where it portends paradigm shifts: discovery (or insight), engagement, and decision making.

Imagine being able to harness the natural language processing capabilities of cognitive computing to build highly detailed customer profiles (including their posts on social media) from unstructured data.

With this insight, you can identify customers and advertise products that you know they might like, not just in general but at a particular time, such as when they graduate from college, get engaged, or are expecting a baby. Cognitive computing will enable you to discover customer insights and behavior patterns that will take targeted advertising to the next level.

Cognitive computing also has the potential to help keep your customers engaged. You might achieve this by using cognitive computing systems to autonomously handle simple customer service queries or help human agents by rapidly analyzing histories of past interactions. Deploying a cognitive system in customer service has the potential to increase consistency and speed up discovery of what customers find truly helpful.

In fields where cognitive computing will remain an adjunct, it can streamline the data collection process for human agents, such as with loan processing. Healthcare and finance have received the most attention, but cognitive computing is being applied everywhere, including manufacturing, construction, and energy.

Given these benefits, it’s no surprise that a 2017 IBM survey of thousands of executives across various industries found that 50 percent planned to adopt cognitive computing by 2019 and that virtually all expected to reap a 15 percent return on investment.

Some of the hottest areas in the cognitive computing space (and biggest recipients of investment) have included machine learning, computer vision, robotics, speech recognition, and natural language processing, according to a 2015 Deloitte analysis.

While cognitive computing holds promise, it still serves best as a supplement or tool for human workers — not a replacement for them. So, cost savings might not be as great as some seem to think.

Moreover, cognitive computing runs on vast amounts of data, and the data needs to be collected, accessed, and leveraged to gain benefits. A business that doesn’t collect data to feed machine learning systems is wasting that capacity. Frequently, making best use of this data will require some structural change — and structural change, in turn, calls for buy-in from the executive suite.

Cognitive technologies may allow you to leverage data in ways you haven’t been able to before, but you need to have a plan for the output. That takes creative, practical thinking. After all, for a business, intelligence is only really useful if it is actionable.

The big names in tech, such as IBM and Alphabet, have been creating new business units to increase volume and generate revenue using cognitive technologies, with the end goal of transforming their business models. IBM, for example, pursues this through global development platforms like the Watson Developer Cloud, which centralizes resources and participants to speed up product development and release. Tech companies offer platform-as-a-service (PaaS) for businesses to run their own cognitive computing applications.

A 2013 McKinsey Global Institute report on “disruptive technologies” forecast that the automation of knowledge work could have an economic impact of more than $5 trillion by 2025.

How Cognitive Computing Is Used

Cognitive computing has made a lot of creative applications possible.

The AI video software at Wimbledon can autonomously generate a highlights reel. How does it know which clips to include? It uses ambient noise, facial recognition, and sentiment analysis to determine which video clips generated the most crowd excitement.
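How the Wimbledon software weighs its signals isn’t specified here, so the following Python is only a hypothetical sketch of the general idea: score each clip on several signals, combine them with weights, and keep the top-ranked clips. The signal names, weights, and scores are all assumptions; in practice, each per-signal score would itself come from a separate recognition model (audio, face, or sentiment analysis).

```python
def excitement(clip, weights):
    """Combine per-signal scores (each assumed to lie in 0..1) into one number."""
    return sum(weights[signal] * clip[signal] for signal in weights)

def pick_highlights(clips, weights, k):
    """Rank clips by combined excitement and return the IDs of the top k."""
    ranked = sorted(clips, key=lambda c: excitement(c, weights), reverse=True)
    return [c["id"] for c in ranked[:k]]

# Hypothetical weights and pre-computed signal scores for four clips
weights = {"crowd_noise": 0.5, "player_reaction": 0.3, "sentiment": 0.2}
clips = [
    {"id": "match_point", "crowd_noise": 0.95, "player_reaction": 0.9, "sentiment": 0.8},
    {"id": "routine_rally", "crowd_noise": 0.2, "player_reaction": 0.1, "sentiment": 0.4},
    {"id": "diving_volley", "crowd_noise": 0.8, "player_reaction": 0.6, "sentiment": 0.7},
    {"id": "changeover", "crowd_noise": 0.05, "player_reaction": 0.0, "sentiment": 0.5},
]
print(pick_highlights(clips, weights, k=2))  # ['match_point', 'diving_volley']
```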

Facial recognition is also used on social media sites like Facebook, whose DeepFace facial recognition software boasts an impressive 97 percent accuracy rate. And in 2017, Google’s machine learning-based voice recognition reached 95 percent accuracy, the threshold associated with human accuracy.
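Speech recognition accuracy figures like the 95 percent above are usually reported as the complement of word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system’s transcript into the reference transcript, divided by the number of reference words. This minimal Python sketch computes WER with a word-level edit distance; the sample sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / words in reference,
    computed with a word-level Levenshtein edit distance."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = fewest edits that turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)

reference = "please book a flight to boston on friday morning for two people"
hypothesis = "please book a flight to austin on friday morning for two people"
wer = word_error_rate(reference, hypothesis)
print(f"WER {wer:.1%}, word accuracy {1 - wer:.1%}")  # WER 8.3%, word accuracy 91.7%
```

One wrong word in twelve already costs more than eight points of accuracy, which shows how demanding a 95 percent threshold is.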

Have you wondered why so many people find it hard to stop watching Netflix? The company uses machine learning algorithms that come up with recommendations for new shows and movies, based on factors like what people watch, when they watch it, and what they don’t watch, as well as where on the site they discovered the video. This application of cognitive computing is a kind of consumer behavior analysis, and Netflix contends it produces a value of $1 billion a year in consumer retention.
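Netflix’s production recommender is far more elaborate than anything that fits here, so the Python below is only a generic illustration of one classic technique, item-based collaborative filtering: titles watched by overlapping sets of viewers are treated as similar, and unwatched titles are ranked by their similarity to what the viewer already watched. The viewing data and function names are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two {user: rating} dictionaries."""
    shared = set(a) & set(b)
    dot = sum(a[u] * b[u] for u in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(ratings_by_title, watched, k=1):
    """Score each unwatched title by its total similarity to watched titles."""
    scores = {}
    for title, viewers in ratings_by_title.items():
        if title in watched:
            continue
        scores[title] = sum(cosine(viewers, ratings_by_title[w]) for w in watched)
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Hypothetical viewing data: title -> {user: 1 if they finished it}
ratings_by_title = {
    "space_docuseries": {"ana": 1, "ben": 1, "cy": 1},
    "ocean_docuseries": {"ana": 1, "ben": 1},
    "romcom": {"dee": 1, "eli": 1},
    "heist_thriller": {"cy": 1, "dee": 1},
}
print(recommend(ratings_by_title, watched={"space_docuseries"}))  # ['ocean_docuseries']
```

Signals such as viewing time or where a title was found could be folded in as extra weights on the ratings, at the cost of a more complex model.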

Chatbots, though not the most cutting-edge application of cognitive computing, also fill a variety of customer service roles, from helping you find a diamond (Rare Carat’s Rocky, powered by IBM’s Watson) to fielding your queries about air travel (Alaska Air’s Jenn).

“Narrowly scoped bots with clear capabilities and boundaries are where success is being seen today, and I believe that will continue, but there is also a need to ensure that users can easily discover new capabilities that are available,” Lucas notes.

Cognitive computing makes appearances in more high-stakes sectors as well, like fraud detection, risk assessment, and risk management at financial institutions and financial service providers.

For auditors, for example, cognitive computing means they don’t have to rely only on testing samples of financial data; they can, instead, check the complete record. And, for underwriters at insurance companies, who must comb through mountains of data when deciding whether to take on customers, cognitive computing can provide a dashboard view of pages upon pages of documents.

In both these applications, the benefits go beyond increased efficiency; they also reduce the risk of human error where it can be very costly. Geico, for example, is using Watson’s cognitive capabilities to learn underwriting guidelines and read risk submissions.

In customer-facing applications, cognitive computing can help people navigate the complex savings and investment landscape. ANZ Bank in Australia does exactly this with financial services apps that examine investment options and provide recommendations based on relevant customer data: age, life stage, risk tolerance, and financial standing. The Brazilian bank Bradesco put Watson to work in its call center, where it fields customers’ queries.

Cognitive computing may even be used to predict weather. In January 2016, IBM acquired The Weather Company, applying cognitive technologies to understand weather patterns and help businesses leverage weather prediction data, so they can do such things as better manage outdoor facilities and integrate weather data with other services on the Internet of Things (IoT).

Cognitive computing is also being integrated into virtual-reality gaming. In February 2018, IBM and Unity announced a partnership that would bring AI functionality to the world’s number one gaming engine.

If you’re a business professional, there are a few questions to ask before moving into cognitive computing. Machine learning is only workable (and promises the most value) in areas of your enterprise that are data intensive, whether the data is visual, textual, or audio. Where do you have access to enough of this data to make a difference, and what difference are you trying to make, exactly?

Then, he recommends applying cognitive functionality to simple processes, such as image recognition. Neurality worked on a dating app that previously performed manual reviews of photos to weed out fake users. He says that with image recognition, the approval time dropped more than 70 percent.

“Cognitive computing projects cost millions. Lower your risk by trying one little thing. Then, build on that. Companies that go all in at once take years and years to get to a real cognitive system,” urges Costantini.

If you’re considering AI-style automated functionality, think about what you’re trying to achieve. Is your area of implementation one that will benefit from automated decision making, or will leveraging data allow you to create a personalized customer experience? Or, conversely, will sacrificing a human touch cost you?

“Cognitive computing is about understanding what people are trying to get done,” Little explains. “The biologist and the chemist may be working on the same sample but want very different data… Give them the data that matters to the job they are trying to do.”

You can accomplish this process by modeling the information in your organization, cataloging it, and assigning metadata. Analytics and federated architecture can make data more usable. Little advises that you focus your efforts on a few areas where having more information and stronger analysis capabilities would have an immediate impact.
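Cataloging information and assigning metadata, so that each role sees the data that matters to its job, might look like the following minimal Python sketch. The `Catalog` and `Dataset` classes, dataset names, and tags are all hypothetical; a production data catalog would likely also need search, access control, and lineage tracking.

```python
from dataclasses import dataclass, field

@dataclass
class Dataset:
    name: str
    tags: set = field(default_factory=set)  # metadata describing the content

@dataclass
class Catalog:
    datasets: list = field(default_factory=list)

    def register(self, name, *tags):
        """Add a dataset to the catalog along with its metadata tags."""
        self.datasets.append(Dataset(name, set(tags)))

    def for_role(self, needed_tags):
        """Return the datasets whose metadata overlaps what a role needs."""
        return [d.name for d in self.datasets if d.tags & needed_tags]

catalog = Catalog()
catalog.register("sample_42_sequencing", "genomics", "sample_42")
catalog.register("sample_42_spectroscopy", "chemistry", "sample_42")
catalog.register("lab_budget", "finance")

# Hypothetical role profiles: same sample, different data for each job
print(catalog.for_role({"genomics"}))   # ['sample_42_sequencing']
print(catalog.for_role({"chemistry"}))  # ['sample_42_spectroscopy']
```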

Remember that cognitive computing doesn’t have to be implemented in outward-facing applications; you may stand to gain much more by using it to serve your employees rather than your customers. Combining the analytical capabilities of cognitive computing with human input can bring great results. Think about Watson for Oncology and how it combines evidence-based insights with expert human decision making.

“The real impact of cognitive computing is not in the headlines. It’s in the weeds,” says Coseer’s Krishna. “For example, we are working with a pharma company to accelerate the drafting of regulatory reports. It’s not like science fiction, where a computer automatically figures out new medicines. Not yet, at least. However, it brings drugs currently in the pipeline more quickly to the market and frees up scientists’ time to innovate more.”

Cognitive computing isn’t going to replace programmatic computing just yet, but if it lives up to its incredible promise, it’s going to be a revolutionary differentiator across industries.

What Is a Cognitive Platform?

Platforms like IBM’s Watson Platform, SAS’s Viya, and CognitiveScale’s Cortex offer cognitive functionalities in Platform-as-a-Service (PaaS) arrangements. As SAS’s Executive Vice President Oliver Schabenberger described it in a 2016 interview with Huffington Post, customers want platforms to provide “speed and scale…flexibility, resilience, and elasticity.”

For now, cognitive implementations often focus on making existing processes cognitive. This means adding cognitive capabilities, from image recognition to speech recognition, and extending analysis from structured, tabular, numeric data to unstructured text. Cognitive platforms offer these functionalities in a suite of services.

Tech writer Jennifer Zaino suggests thinking about cognitive platforms as “extending the ecosystem that will make it possible to create cognitive computing applications and bring them to the masses.” Cognitive platforms simplify implementations for companies, and therefore can be thought of as a way to bring cognitive computing to the public.

OSTHUS’ Little says that some companies prefer interconnected systems rather than platforms: “These companies are interested in buying things that allow them to use best of breed technology” rather than being confined to “a big one-off platform.”

Who’s Who in Cognitive Computing

IBM’s Watson is the closest thing to a household name in cognitive computing. Assembling an array of cognitive technologies that include natural-language processing, hypothesis generation, and evidence-based learning, Watson is capable of deep content analysis and evidence-based reasoning, which allows it to bring computer cognition to a wide variety of fields, from oncology to jewelry. IBM, a longtime pioneer of artificial intelligence, reported in 2015 that it had spent $26 billion on big data and analytics and was spending nearly a third of its R&D budget on Watson.

Microsoft’s Cognitive Services are a set of APIs, SDKs, and services based on Microsoft’s machine learning portfolio. They are available to developers who want to harness the power of cognitive computing by building facial, vision, and speech recognition, natural language understanding, and emotion and video detection, among other things, into their applications. Cognitive Services works across a variety of platforms, including Android, iOS, and Windows, and it provides the cognitive power behind Progressive Insurance’s Flo chatbot.

The British artificial intelligence company DeepMind was acquired by Google in 2014, and since then it has been making waves primarily in two sectors: games and healthcare. DeepMind’s AlphaGo program became the first ever to beat a professional human opponent at the game of Go in 2015, later defeating the world’s top-ranked player in a three-game match. DeepMind also differs from Watson in approach: some of its systems, such as its agents for classic Atari video games, learn directly from raw pixels as data input. In healthcare, a 2016 collaboration between DeepMind and Moorfields Eye Hospital was set up to analyze eye scans for early signs of blindness, and, in the same year, another partnership with University College London Hospital sought to develop an algorithm that could differentiate healthy tissue from cancerous tissue in the head and neck areas.

Other providers of cognitive solutions for enterprises include Cognitive Scale, which attempts to integrate intelligence based on machine learning into business processes by way of actionable insights, recommendations, and predictions. HPE Haven OnDemand provides what is in essence a big-data, cognitive-powered, curated search feature that allows users to access multiple structured and unstructured data formats. CustomerMatrix Cognitive Engine uses computer cognition to build recommendations on how to generate the greatest impact on revenue. It achieves this by analyzing past outcomes and presenting an actionable, ranked list of steps that, based on evidence, would likely increase revenue. And Cisco Cognitive Threat Analytics analyzes web traffic based on its source and destination as well as the nature of the data being transmitted, and it reports when sensitive data is being taken.
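The idea of a ranked, evidence-based list of revenue actions can be illustrated with a much simpler technique: score each candidate action by the average revenue change that followed it in past cases, then sort. This is a hypothetical sketch, not CustomerMatrix's actual method; the action names, revenue figures, and the `rank_actions` helper are invented for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical history: (action taken for an account, revenue change observed afterwards)
past_outcomes = [
    ("offer_renewal_discount", 1200.0),
    ("offer_renewal_discount", 800.0),
    ("schedule_product_demo", 2500.0),
    ("schedule_product_demo", -300.0),
    ("send_upsell_email", 150.0),
    ("send_upsell_email", 250.0),
    ("send_upsell_email", 200.0),
]

def rank_actions(outcomes, min_observations=2):
    """Rank actions by their average observed revenue change.

    Actions with fewer than `min_observations` past outcomes are skipped,
    since there is too little evidence to rank them.
    """
    by_action = defaultdict(list)
    for action, revenue_delta in outcomes:
        by_action[action].append(revenue_delta)
    scored = [
        (action, mean(deltas), len(deltas))
        for action, deltas in by_action.items()
        if len(deltas) >= min_observations
    ]
    # Highest average uplift first
    return sorted(scored, key=lambda item: item[1], reverse=True)

for action, avg_uplift, n in rank_actions(past_outcomes):
    print(f"{action}: avg uplift {avg_uplift:.2f} over {n} cases")
```

Ranking on a plain average ignores how noisy each estimate is; the `min_observations` cutoff is only a crude guard against recommending actions with almost no evidence behind them.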

SparkCognition has three offerings: Spark Predict, a cognitive system for industry that uses sensor data to optimize operations and warn operators of likely failures; Spark Secure, which improves threat detection; and MindFabric, a platform for professionals to access “deep insights” from data. Numenta creates cognitive technology that specializes in detecting anomalies, whether in human behavior, geospatial tracking, or servers and applications. Expert System’s Cogito specializes in NLP across multiple languages, which enables organizations to better analyze masses of unstructured content.
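Numenta's own anomaly detection is built on its Hierarchical Temporal Memory (HTM) theory, which is far more sophisticated than anything that fits here. As a much simpler stand-in that shows what "detecting anomalies" means for server metrics, the sketch below flags any reading that falls more than a chosen number of standard deviations from a trailing window of recent readings (a rolling z-score). The data and the `rolling_zscore_anomalies` function are hypothetical.

```python
from statistics import mean, stdev

def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as anomalous
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical server response times in milliseconds, with one spike
response_ms = [101, 99, 100, 102, 98, 100, 101, 250, 99, 100]
print(rolling_zscore_anomalies(response_ms))  # [7]
```

Note that the spike inflates the window statistics for the next few readings, which is one reason production systems use more robust methods than this.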

Cognitive Computing at Work

Cognitive systems have already been put to work in a number of industries, where they’re able to supplement human intelligence in fascinating and sometimes surprising ways.

Vantage Software and IBM Watson, for example, combined cognitive computing with knowledge of private equity to create Coalesce, a software tool for investment managers that helps them analyze data about risk, company profiles and management, and the industry landscape, so they can make better investment decisions.

LifeLearn, which provides marketing and corporate solutions for veterinary businesses, used cognitive computing to create an application named LifeLearn Sofie, which helps veterinarians access veterinary data resources and ask for assistance with diagnoses to improve the quality of care.

WayBlazer, a cognitive-powered travel planning startup, uses NLP capabilities to personalize travel planning based on three sources of information: declared information (requested when a user signs up), observed information (from linked social media profiles), and inferred information (from previous activity on a travel planning application). The company delivers personalized travel recommendations to users through APIs or online interfaces.
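One way to picture how declared, observed, and inferred information might be combined is to weight each signal by how much it can be trusted, sum the weights per interest, and score destinations against the resulting profile. This is a hypothetical sketch, not WayBlazer's system: the weights, interests, destinations, and function names are assumptions made for illustration.

```python
# Hypothetical trust weights: explicit statements count more than inferences
SOURCE_WEIGHTS = {"declared": 1.0, "observed": 0.6, "inferred": 0.4}

def build_profile(signals):
    """Combine (source, interest, strength) signals into one score per interest."""
    profile = {}
    for source, interest, strength in signals:
        weight = SOURCE_WEIGHTS[source]
        profile[interest] = profile.get(interest, 0.0) + weight * strength
    return profile

def recommend(profile, destinations, top_n=2):
    """Score each destination by the summed profile weight of its tags."""
    scored = []
    for name, tags in destinations.items():
        score = sum(profile.get(tag, 0.0) for tag in tags)
        scored.append((score, name))
    scored.sort(reverse=True)  # highest score first
    return [name for score, name in scored[:top_n]]

signals = [
    ("declared", "beaches", 1.0),   # stated at sign-up
    ("observed", "hiking", 1.0),    # e.g., hiking posts on a linked profile
    ("inferred", "beaches", 0.5),   # e.g., earlier searches for coastal hotels
    ("inferred", "museums", 1.0),   # e.g., earlier browsing of city tours
]
destinations = {
    "Coastal town": ["beaches", "seafood"],
    "Mountain park": ["hiking", "camping"],
    "Capital city": ["museums", "nightlife"],
}
profile = build_profile(signals)
print(recommend(profile, destinations))  # ['Coastal town', 'Mountain park']
```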

Edge Up Sports brought cognitive analytics to fantasy football. Its app takes on the research for fantasy football players by gathering unstructured sports analysis data and creating a summary view of all the news on a given player, which gives fantasy football managers the ability to draft better teams.

BrightMinded, a health and wellness company, created a cognitive-powered app for personal trainers called TRAIN ME, which uses clinical research and tracks user data to help trainers create personalized exercise regimens for their clients.

Cognitive Computing Helps Heal with Healthcare Applications

Given the massive amounts of data that healthcare professionals must navigate and the life-or-death stakes, the healthcare industry has attracted some especially ambitious cognitive computing projects.

The signature application of cognitive systems in healthcare is Watson for Oncology, a partnership between clinicians and analysts at Memorial Sloan Kettering in New York and IBM Watson. Based on decades of clinical data as well as learning directed by oncological subspecialists, Watson for Oncology was designed to identify evidence-based treatment options for cancer patients. One of the goals was to disseminate knowledge from a specialized cancer treatment center to facilities that don’t have the same resources; another was to increase the speed with which cancer research impacted clinical practice.

Another fascinating example of Watson at work in healthcare comes from the field of genomics, which deals with DNA. IBM Watson Genomics uses data from the sequencing and analysis of a tumor’s genomic makeup to reveal mutations that can then be compared with information from medical literature, clinical studies, and pharmacology to provide insights into the effects of mutations and therapy. The clinical data comes from OncoKB, a knowledge base maintained by Memorial Sloan Kettering that contains details of alterations in hundreds of cancer genes.
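At its core, the comparison described here has the shape of a lookup: each mutation found in the tumor is checked against a curated knowledge base, and matches carry their supporting evidence. The sketch below shows that shape only. The gene names, protein changes, and annotations are deliberately fake placeholders, and the `KNOWLEDGE_BASE` dictionary does not reflect OncoKB's real contents or format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Annotation:
    oncogenic: bool
    evidence: str  # free-text summary of supporting literature or trials

# Hypothetical knowledge base keyed by (gene, protein change); placeholder entries only.
KNOWLEDGE_BASE = {
    ("GENE_A", "p.V100E"): Annotation(True, "placeholder: targeted therapy trial"),
    ("GENE_B", "p.R200*"): Annotation(True, "placeholder: case series"),
    ("GENE_C", "p.A50T"): Annotation(False, "placeholder: benign in population data"),
}

def annotate_tumor(mutations):
    """Sort a tumor's mutations into known-oncogenic, known-benign, and unknown."""
    report = {"oncogenic": [], "benign": [], "unknown": []}
    for mutation in mutations:
        annotation = KNOWLEDGE_BASE.get(mutation)
        if annotation is None:
            report["unknown"].append(mutation)
        elif annotation.oncogenic:
            report["oncogenic"].append((mutation, annotation.evidence))
        else:
            report["benign"].append(mutation)
    return report

tumor = [("GENE_A", "p.V100E"), ("GENE_C", "p.A50T"), ("GENE_D", "p.G12S")]
print(annotate_tumor(tumor))
```

The hard part in practice is everything this sketch leaves out: calling mutations from sequencing data, normalizing their names, and keeping the evidence current.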

The implications of these still-groundbreaking applications are staggering. In the future, comprehensive healthcare systems powered by cognitive technologies might be able to collate all human knowledge around particular conditions, including both experimental results and patient histories, to diagnose disease and recommend evidence-based courses of treatment.

What’s the Problem with Cognitive Computing?

While the benefits from cognitive computing are great, AI does generate some fear. Some people are afraid it will do away with the need for human workers in a variety of jobs. Could cognitive computing challenge white-collar knowledge work the same way factory automation challenged blue-collar work in the 19th century?

The answer to this question, at least so far, is reassuring. As Bertrand Duperrin puts it, “A man with a machine will always beat a machine.” Cognitive computing, in its current form, is not going to replace most people; instead, it will extend their cognitive capabilities (called augmentation) and make them more effective in their roles. Historically, new technology has tended to push people into higher-value jobs rather than do away with jobs, some researchers say.

A 2017 survey of company leaders by Deloitte found that 69 percent of enterprises expected minimal to no job losses and some job gains from these technologies over the next three years.

Other concerns are a little more immediate and perhaps better founded. One has to do with algorithms and whether their ability to predict human behavior gives them the capacity to control humans in ways that are detrimental. Algorithms that power news aggregation services can conceivably reinforce our biases instead of painting a more accurate, nuanced picture of the world, as news is supposed to do.
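The feedback loop behind this concern can be shown with a deliberately crude model: a feed that always shows the topic a reader has clicked most, and a reader who clicks whatever is shown. The topics, starting counts, and greedy ranking rule are all hypothetical; real aggregators use far richer signals, but the toy makes the self-reinforcing dynamic easy to see.

```python
def greedy_feed(clicks, rounds):
    """Each round, show the topic with the most past clicks; the reader
    clicks it, which makes the same topic even more likely to be shown."""
    clicks = dict(clicks)  # work on a copy so the caller's data is untouched
    shown = []
    for _ in range(rounds):
        # Ties are broken alphabetically because max() keeps the first maximum
        top = max(sorted(clicks), key=lambda topic: clicks[topic])
        shown.append(top)
        clicks[top] += 1
    return clicks, shown

def share(clicks, topic):
    return clicks[topic] / sum(clicks.values())

start = {"viewpoint_a": 3, "viewpoint_b": 2, "science": 2}
end, shown = greedy_feed(start, rounds=10)
print(f"share of viewpoint_a: {share(start, 'viewpoint_a'):.2f} -> {share(end, 'viewpoint_a'):.2f}")
# share of viewpoint_a: 0.43 -> 0.76
```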

Cognitive technologies can capture both structured and unstructured data and can paint a more holistic picture of human psychographics and behavior than has been possible before. For some people, this might be nothing more than another privacy-related headache. For others, it might create genuine concerns about being watched by Big Brother. There are even those, such as Tesla founder Elon Musk, who believe AI poses an existential threat to humanity and should be regulated.

Other issues with cognitive computing are more akin to growing pains. These include the lengthy, often laborious process of training a cognitive system via machine learning. Many organizations do not realize that the data-intensive nature of cognitive systems and their slow training can delay adoption. Another shortcoming of cognitive computing is its inability to analyze risk in unstructured data arising from exogenous factors, such as the cultural and political environment.

What Are Cognitive Computing Chips?

In 2011, Nature published an article about a new “cognitive computing microchip” created by IBM. Unlike conventional computers, which separate memory from computational elements, the new chips had their computational elements and RAM wired together. The chips were inspired by the structure of the brain: computational elements act as neurons, and RAM acts as the synapses that connect the neurons together. These neurons and synapses were simulated on a structure called a core, which is the building block of neuromorphic (brain-like) architecture. IBM’s TrueNorth chip, for example, comprises 4,096 cores; each core simulates 256 programmable neurons, and each neuron, in turn, has 256 programmable synapses.
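Multiplying out the TrueNorth figures shows where its headline numbers come from. This is plain arithmetic on the counts in the paragraph. Note that 2**28 is about 268 million, so the "256 million synapses" figure quoted for IBM's chip later in this article appears to count in binary millions (256 × 2**20); that reading is our inference, not something IBM states here.

```python
CORES = 4096
NEURONS_PER_CORE = 256
SYNAPSES_PER_NEURON = 256

neurons = CORES * NEURONS_PER_CORE
synapses = neurons * SYNAPSES_PER_NEURON

print(f"neurons:  {neurons:,}")   # neurons:  1,048,576  (2**20, "1 million")
print(f"synapses: {synapses:,}")  # synapses: 268,435,456  (2**28)
```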

Cognitive chips — or neurosynaptic chips, as they are sometimes called — are an alternative approach to cognitive computing systems. Where conventional cognitive computing seeks to mimic human cognitive functions using software, cognitive chips are designed to work like brains at the hardware level.

This design consumes much less power than conventional computing based on the traditional von Neumann architecture because it is event-driven and asynchronous. In conventional architectures, each calculation is allocated a fixed unit of time (on the order of billionths of a second) in a process called clocking, even if the calculation does not need that much time to complete, so time is wasted. The neurosynaptic chip gives each calculation only as much time as it needs before firing off an impulse to begin the next one, like a relay. TrueNorth has a power density that is one ten-thousandth that of conventional microprocessors.
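The difference between clocked and event-driven processing can be made concrete by counting work. In the toy model below, a clocked design touches every neuron on every tick, while an event-driven design touches a neuron only when a spike arrives for it; each neuron simply accumulates incoming spikes and fires when it reaches a threshold (a simplified integrate-and-fire rule with no leak). The neuron counts, spike times, and threshold are hypothetical, and this is not a model of TrueNorth's actual circuitry.

```python
import heapq

def clocked_updates(num_neurons, num_ticks):
    """Synchronous model: every neuron is updated on every clock tick,
    whether or not anything happened to it."""
    return num_neurons * num_ticks

def event_driven_updates(spike_events, threshold=2):
    """Asynchronous model: a neuron is touched only when a spike arrives.

    spike_events: iterable of (time, target_neuron) pairs.
    Returns (number_of_updates, list of (time, neuron) output spikes).
    """
    queue = list(spike_events)
    heapq.heapify(queue)  # process events in time order
    potential = {}
    updates = 0
    fired = []
    while queue:
        time, neuron = heapq.heappop(queue)
        updates += 1
        potential[neuron] = potential.get(neuron, 0) + 1
        if potential[neuron] >= threshold:
            fired.append((time, neuron))
            potential[neuron] = 0  # reset after firing
    return updates, fired

# Hypothetical sparse input: 1,000 neurons, 1,000 ticks, only 5 incoming spikes
events = [(3, 7), (10, 7), (42, 500), (400, 12), (401, 12)]
print(clocked_updates(1000, 1000))   # 1000000
print(event_driven_updates(events))  # (5, [(10, 7), (401, 12)])
```

The savings depend on activity being sparse; if every neuron received input on every tick, the two approaches would do comparable work.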

The “memtransistor,” created by researchers at Northwestern University’s McCormick School of Engineering, performs both memory and information processing, which makes it a closer technological equivalent to a neuron. Memtransistors can be used in electronic circuits and systems based on neuromorphic architectures.

To illustrate the difference between traditional computers and neurosynaptic chips, IBM uses a left-brain/right-brain analogy, in which the left brain (traditional computing) focuses on language and analytical thinking, while the right brain (neurosynaptic chips) addresses the senses and pattern recognition. IBM says it hopes to meld the two capabilities to create what it terms a holistic computing intelligence.

The Future of Cognitive Computing

Although neurosynaptic chips are revolutionary in their design, they don’t begin to approach the complexity of the human brain, which has 80 billion neurons and 100 trillion synapses and is extraordinarily power-efficient. IBM’s latest SyNAPSE chip has only 1 million neurons and 256 million synapses. Work continues on neuromorphic architectures that will approximate the structure of the human brain: IBM says it wants to build a chip system with 10 billion neurons and 100 trillion synapses that consumes just one kilowatt of power and fits inside a shoebox.
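Putting the figures in this paragraph side by side shows how wide the gap still is. The numbers are the ones quoted above; the chip count for IBM's goal assumes, purely for illustration, that chips could be tiled with no interconnect overhead, which real systems cannot do.

```python
BRAIN_NEURONS = 80e9
BRAIN_SYNAPSES = 100e12
CHIP_NEURONS = 1e6       # SyNAPSE chip figure quoted above
CHIP_SYNAPSES = 256e6
TARGET_NEURONS = 10e9    # IBM's stated goal for a chip system

print(f"neuron gap:  {BRAIN_NEURONS / CHIP_NEURONS:,.0f}x")    # neuron gap:  80,000x
print(f"synapse gap: {BRAIN_SYNAPSES / CHIP_SYNAPSES:,.0f}x")  # synapse gap: 390,625x
print(f"chips needed for the goal (naive tiling): {TARGET_NEURONS / CHIP_NEURONS:,.0f}")  # 10,000
```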

One important milestone of the future is the exascale computer, a system that can perform a billion billion calculations per second, a thousand times more than the petascale computers introduced in 2008. Exascale computing is thought to match the processing power of the human brain at the neural level. The U.S. Department of Energy has said that at least one of the exascale machines it hopes to build by 2021 will use a “novel architecture.”

Another groundbreaking proposition for cognitive computing comes from scientists and researchers who want to harness the vast and proliferating amounts of scientific data. They propose the development of a cognitive “smart colleague” that could collate knowledge and contextualize it so that it could identify and accelerate research questions needed to meet overarching scientific objectives, and even facilitate the processes of experimentation and data review.

While IBM is still a global leader in cognitive computing, aiming to “cognitively enable and disrupt industries,” it faces competition from cognitive computing startups in specific sectors. Some of the more significant include the following: Narrative Science’s Quill, a platform that turns data into meaningful narratives; Digital Reasoning’s Synthesys, which uses unstructured information to create contextualized big-picture insights for its clients; and Flatiron Health, which, like Watson for Oncology, collects and analyzes oncology data to provide insights for medical practitioners.

To stay competitive, IBM has enlisted one of the rising stars of AI: 14-year-old Tanmay Bakshi, a prodigy who is partnering with the tech giant on healthcare projects.

An area of the cognitive computing landscape that will likely see major expansion is custom cognitive computing. Custom cognitive computing is based on the principle that developers who want to bring cognitive functions (such as computer vision and speech recognition) to their applications should not have to worry about the complex process of training these intelligent functions, since training requires creating massive curated datasets. Instead, custom cognitive computing allows developers to simply upload labeled data to the cloud and train the model iteratively until the desired accuracy is achieved. Custom cognitive computing offers a way to democratize machine learning for developers.
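The upload-then-train loop described here can be sketched with the simplest trainable classifier, a perceptron, run one pass at a time until it reaches a target accuracy on the labeled data. Real custom cognitive services train far more complex models than this (and should measure accuracy on held-out data rather than the training set), so treat it as an illustration of the iterative loop only; the dataset and function names are hypothetical.

```python
def predict(weights, bias, x):
    """Linear threshold unit: 1 if the weighted sum is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def accuracy(weights, bias, data):
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)

def train_until(data, target_accuracy=1.0, learning_rate=0.1, max_epochs=100):
    """Perceptron trained one pass (epoch) at a time until the target accuracy
    on the labeled data is reached or the epoch budget runs out."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        for x, y in data:
            error = y - predict(weights, bias, x)
            if error:  # update only on mistakes
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        acc = accuracy(weights, bias, data)
        if acc >= target_accuracy:
            return weights, bias, epoch, acc
    return weights, bias, max_epochs, acc

# Hypothetical labeled data "uploaded" by a developer: label is 1 when x0 + x1 > 1
labeled = [((0.0, 0.0), 0), ((1.0, 1.0), 1), ((0.2, 0.3), 0),
           ((0.9, 0.8), 1), ((0.1, 0.7), 0), ((0.6, 0.9), 1)]
weights, bias, epochs, acc = train_until(labeled)
print(f"reached {acc:.0%} accuracy after {epochs} epoch(s)")
```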

Cognitive computing also intersects with two other rising technologies: the Internet of Things (IoT) and blockchain technology. IoT refers to a complex system of devices and appliances that possess computing power and are connected to each other via the internet. Each device in the IoT can collect data, but there’s little being done with it so far. Cognitive computing promises to draw insights from this data in ways that will make IoT devices work better together. Collecting and exchanging this data raises security risks, which is where blockchain technology comes in. Blockchain could improve data privacy for information collected from the IoT and help create “marketplaces” for the exchange of data.
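One property blockchain brings to IoT data is tamper evidence: each record carries a hash of the record before it, so altering any earlier reading breaks the chain. The sketch below builds such a hash chain for hypothetical sensor readings using only Python's standard library. It is a minimal illustration of hash chaining, not a blockchain: there is no distributed ledger or consensus, and it provides integrity checks rather than privacy, which would additionally require access control or encryption.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block

def block_hash(index, reading, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps({"index": index, "reading": reading, "prev": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_reading(chain, reading):
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({"index": index, "reading": reading, "prev": prev_hash,
                  "hash": block_hash(index, reading, prev_hash)})

def verify(chain):
    """Recompute every hash and check each block points at its predecessor."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i else GENESIS_PREV
        if block["prev"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["reading"], block["prev"]):
            return False
    return True

chain = []
for reading in [{"sensor": "thermo-1", "celsius": 21.5},
                {"sensor": "thermo-1", "celsius": 21.7}]:
    append_reading(chain, reading)
print(verify(chain))                   # True
chain[0]["reading"]["celsius"] = 30.0  # tamper with an early reading
print(verify(chain))                   # False
```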

Further Reading to Learn More about Cognitive Computing

If you’re looking for more resources to learn about cognitive computing, here’s where to start:

Reynolds, H. & Feldman, S. (2014). “Cognitive Computing: Beyond the Hype.” KM World: 27.
